Florida-based composer David Ott, currently music director and maestro of the Panama City POPS (in Panama City, FL), reached out to composer, arranger, and multi-instrumentalist David Goldflies a few months ago to see whether the Allman Goldflies Band would be interested in performing songs from its first album release, Second Chance, with the POPS.

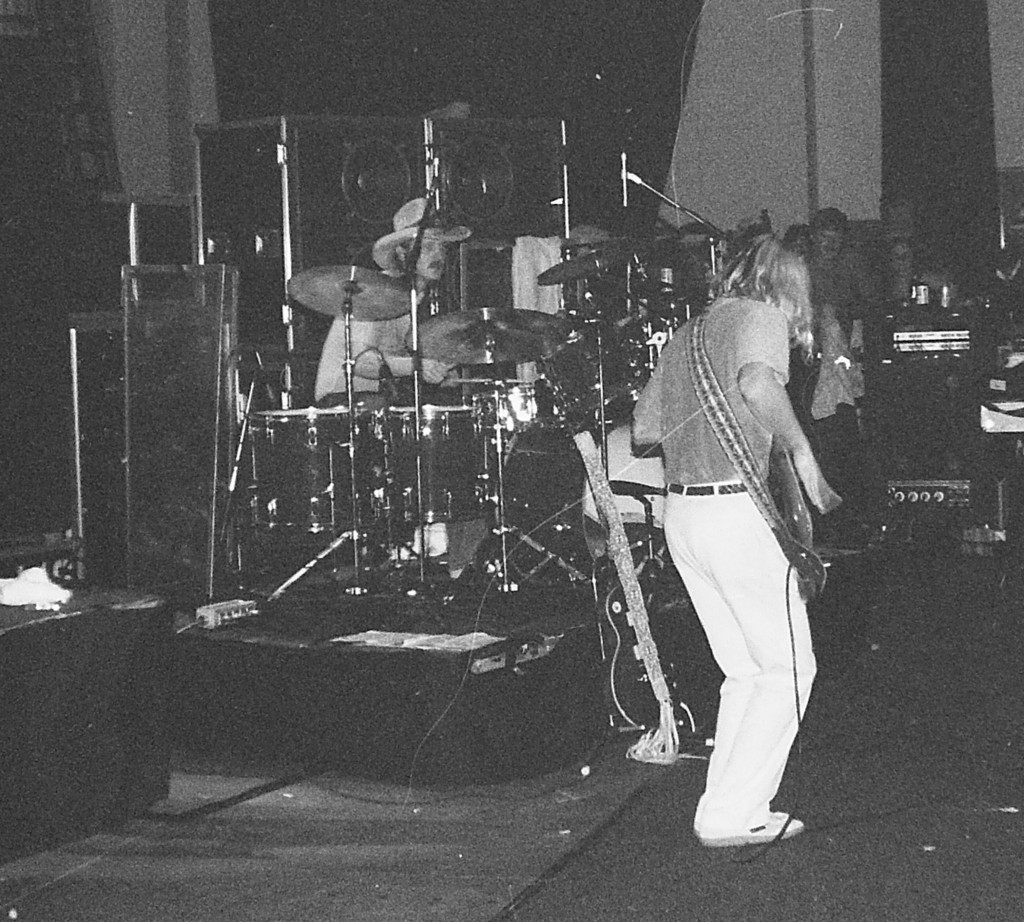

That one question led to the upcoming performance of music by Gary Allman and David Goldflies (a former bassist of the Allman Brothers Band), with rich orchestrations penned by David Ott.

The music of the Allman Goldflies Band can be heard on Spotify, YouTube, iTunes, and other digital music outlets. The performance on Oct. 13, 2018, at Sweetbay in Panama City, FL, will be recorded in high-definition video and audio.

The group (AGB and the PC POPS) will also perform two Allman Brothers classics: Whipping Post and Midnight Rider. We felt that many of today’s classical music fans knew and loved the music of the ’70s and would enjoy hearing the rich sound of the orchestra combined with the rockin’ sound of the AGB. And for more traditional listeners, this concert offers an opportunity to broaden their listening horizons and perhaps discover a whole new way to listen to an orchestra.